AI & the Architecture of Trust

Developed as part of Brown University’s Effective and Ethical AI program (Spring 2025), revised for clarity. This case analysis explores how communication design and governance shape responsible AI implementation.

What Sephora and Samaritans Teach Us About Responsible Implementation

Artificial intelligence offers powerful opportunities to expand services, reduce workloads, and strengthen relationships between organizations and the people they serve. It’s no wonder there is justifiable excitement—and significant hype—surrounding AI’s potential.

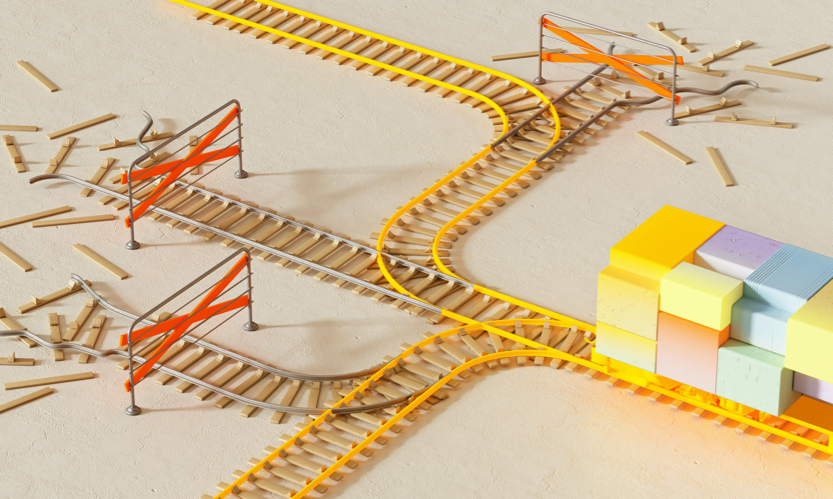

But even the most serious business and nonprofit leaders are vulnerable to overly optimistic projections that aren't grounded in systems awareness or process design. The past two years of mass layoffs in the tech industry, often justified by promises of AI-driven efficiency, are a stark reminder that speed without structure can have profound human consequences. In the rush to adopt AI, it's easy to overlook the invisible scaffolding of communication practices, trust structures, and ethical review that determines whether an initiative will succeed or cause damage.

Without thoughtful systems in place, organizations risk undermining their mission and alienating the very communities they hope to serve. We've seen how quickly trust can erode when implementation decisions move faster than governance or consent.

This paper compares two real-world examples: Sephora’s successful rollout of AI-powered customer support tools, and the Samaritans’ failed launch of the “Radar” suicide detection feature. Together, these cases illustrate how communication and trust—and not only technology—are the foundations of responsible AI use.

“AI will amplify whatever foundation it’s given... Responsible implementation is about coherence, not speed.”

Sephora: A Customer Support Success

Sephora’s AI tools—chatbots and recommendation engines—support customers in stores and online by offering product suggestions and answering routine questions. Unlike many organizations racing to adopt AI, Sephora grounded its rollout in clear workflows, staff training, and human oversight. Employees were prepared to explain the technology and remained central to the customer experience.

Crucially, Sephora also right-sized its assumptions. Unlike tools in clinical or crisis settings, its AI operated in a lower-stakes domain with clear outcomes. Beauty advice may be personal, but it is rarely life-altering. This bounded scope allowed Sephora to define a useful, low-risk role for AI without replacing human judgment.

This restraint contributed to its success. By maintaining a clear distinction between machine support and human care, Sephora strengthened both staff and customer trust—while reinforcing the brand’s values of personalization and service.

Samaritans Radar: A Well-Intentioned Failure

In stark contrast, the UK mental health charity Samaritans launched an AI tool in 2014 called Radar, which scanned public Twitter posts for signs of suicidal ideation and notified followers who had signed up for the app. The goal was to alert friends and encourage support. However, the tool never sought consent from the people whose posts it scanned, nor did it communicate clearly how their data would be used. Many users, especially those in the mental health community, viewed the tool as a violation of privacy. Radar launched on October 29 and was suspended just over a week later, following a tidal wave of backlash.

Samaritans is a service-driven organization, and its tool was created with good intentions. But it relied on a troubling and common assumption that public expression implies enduring consent. Access to someone’s vulnerability—even if technically public—is not the same as permission to act.

Radar’s design skipped the most important step: asking whether the people it sought to protect wanted this form of help at all. Its communication failure was both strategic and ethical, rooted not only in technological optimism but also in the culture of speed that has shaped digital development for decades. In tech, we’ve come to accept that it’s fine to move fast and break things, especially if you can apologize later. But when it comes to mental health and human dignity, that mindset causes harm before it can drive innovation.

Comparative Lessons & Takeaways

While Sephora built internal and external trust through clarity and inclusion, Samaritans bypassed that trust entirely. These cases illustrate that communication isn’t just a support function—it’s a critical part of AI design and deployment. Especially for small organizations, external pressure to “keep up” with AI trends can make it tempting to move forward without deep systems thinking. But as these cases show, skipping foundational communication and governance can quickly erode trust and damage reputation.

For small businesses and nonprofits, the lesson is clear: AI must be implemented with transparency, feedback loops, and empathy. Organizations should actively involve employees and users early in the process and treat AI as a system that reflects their values—not a plug-and-play solution. Implementation pace must be intentional, and guardrails must be proactively defined.

Conclusion

AI isn’t just a search window that responds to inputs. It amplifies and reflects the assumptions, values, and pace of the systems into which it is introduced. Sephora’s success wasn’t only about choosing the right technology; it was about right-sizing its role, maintaining appropriate boundaries, and communicating clearly with staff and customers. By contrast, Samaritans Radar failed not because of malice, but because it skipped the quiet architecture of consent, community engagement, and contextual humility.

Responsible AI implementation is about coherence, not speed. And coherence requires care: care in design, care in rollout, and care in understanding the human consequences of automation. We live in the long shadow of a tech culture that prizes iteration over introspection—where moving fast and breaking things has been normalized for decades. But in environments of emotional vulnerability and trust, what gets broken may not be so easily repaired.

AI will amplify whatever foundation it’s given. When that foundation is ethically grounded, clearly communicated, and culturally aware, AI can extend what’s best about human service. But when that foundation is fragmented or assumed, even well-meaning systems can quickly do serious harm.